- What Is an AI Tech Stack?

- 8 Essential Layers of an AI Tech Stack

- Core Components of an AI Tech Stack

- 8 Best Practices for Building and Managing an AI Technology Stack

- Emerging Trends Shaping The Future of AI Tech Stacks

- How Space-O Technologies Can Be Your AI Co-Pilot

- Frequently Asked Questions on AI Tech Stack

Comprehensive Guide to AI Tech Stacks

Have you ever marveled at how AI can recommend the perfect movie, predict the weather, or even drive a car? It’s mind-blowing, right? But what’s even more fascinating is what’s happening behind the scenes to make all this possible.

Building an AI system is like constructing a house. You wouldn’t start putting up walls without laying a solid foundation first, would you? An AI tech stack is that foundation: the essential tools and technologies needed to create the AI applications we rely on every day.

For companies offering AI development services, understanding the tech stack is key. These services encompass everything from selecting the right frameworks to deploying AI solutions that can handle complex tasks, making it easier for businesses to bring AI innovations to life.

Choosing the right AI frameworks is crucial, as they serve as the building blocks that determine an application’s scalability, performance, and ultimate success.

In this blog, we’re going to take an informative dive into an AI tech stack. We’ll break down the key components, layers, frameworks, and emerging trends in the field of AI.

Ready to geek out on the tech that’s shaping our future? Let’s jump in and explore the cool and complex world of the AI tech stack together.

What Is an AI Tech Stack?

An AI tech stack is a collection of AI development tools, frameworks, and technologies that work together to create intelligent applications. You could say it’s the blueprint for constructing smart systems. Encompassing everything from data acquisition and storage to the development and deployment of complex AI models, an AI tech stack is a dynamic ecosystem that constantly evolves with new technologies and techniques.

In 2025, generative AI emerged as the most talked-about technology, driving innovation across industries. According to a report by Goldman Sachs, investment in artificial intelligence is expected to skyrocket to $200 billion by the end of 2025. That’s a staggering figure and a testament to how transformative AI is becoming. This surge in interest highlights the importance of a well-structured AI tech stack, which underpins the development and deployment of advanced AI systems.

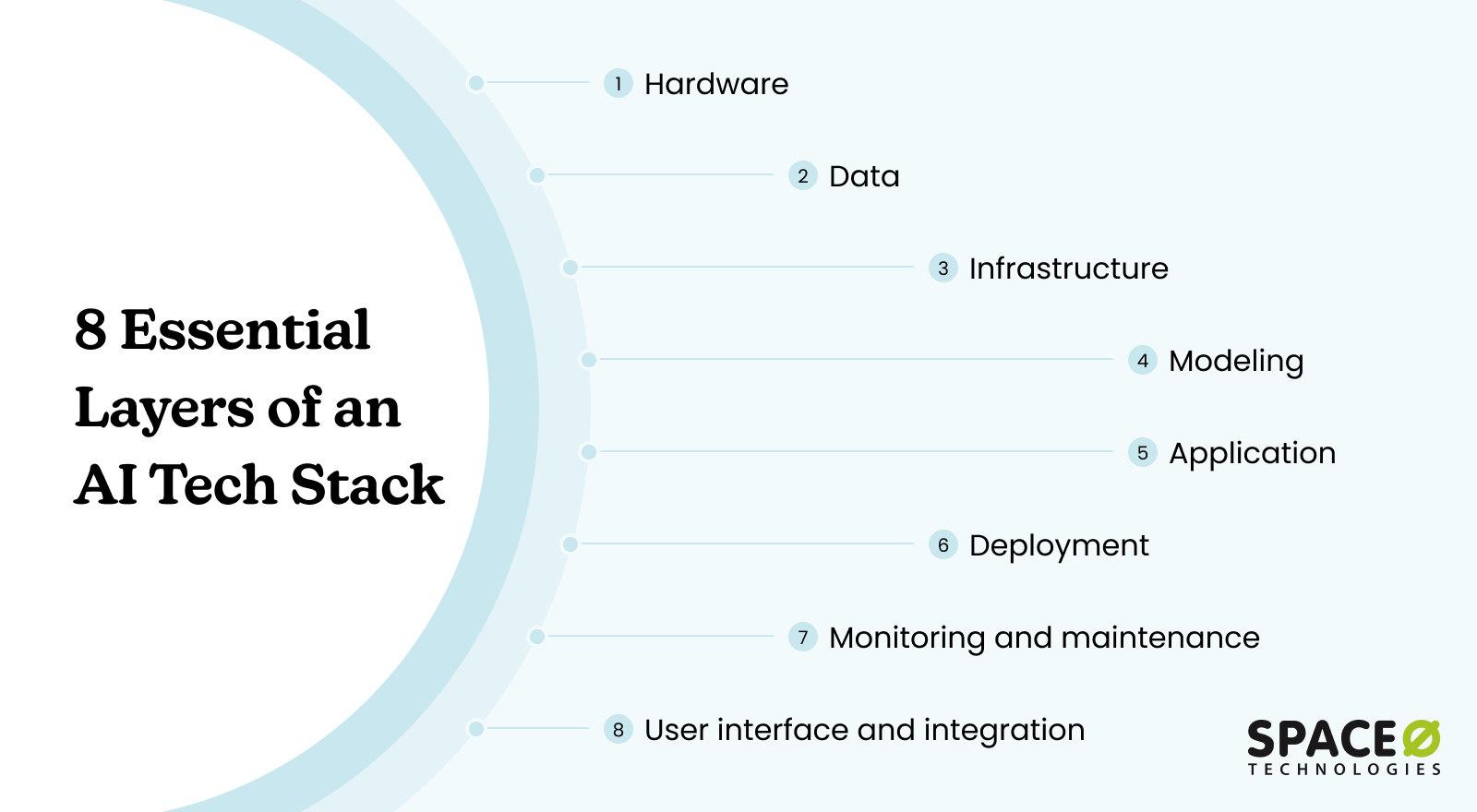

8 Essential Layers of an AI Tech Stack

Building an AI system involves a complex and layered tech stack, each component playing a critical role in the overall functionality and efficiency of the system.

Let’s explore these layers in detail.

Layer 1: Hardware

Let’s start with CPUs, the general-purpose processors in our computers. They’re good for a lot of things, but when it comes to AI, you often need more muscle. That’s where GPUs (Graphics Processing Units) come in. GPUs are like the bodybuilders of the tech world, handling the heavy lifting required for training AI models. Then there are TPUs (Tensor Processing Units), which Google designed specifically to speed up machine learning tasks. They’re like the race cars of computing, built for speed and efficiency in AI.

Now, all that data has to go somewhere, right? For storage, you’ve got SSDs and HDDs: SSDs are super fast but a bit pricey, while HDDs offer more space at a lower cost. For really big data, you can use distributed storage systems like HDFS or cloud storage options like Amazon S3. These systems spread the data across multiple machines, making it easier for you to store and access it at scale.
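As a rough illustration of how a distributed file system spreads data across machines, the sketch below splits a file into fixed-size blocks and assigns each block’s replicas to different nodes. The 128 MB block size and replication factor of 3 match HDFS defaults, but the round-robin placement and the node names are simplifying assumptions; real HDFS uses a rack-aware placement policy.

```python
import math

BLOCK_SIZE_MB = 128   # HDFS default block size (dfs.blocksize)
REPLICATION = 3       # HDFS default replication factor (dfs.replication)

def plan_blocks(file_size_mb: int, nodes: list[str],
                block_size_mb: int = BLOCK_SIZE_MB,
                replication: int = REPLICATION) -> list[list[str]]:
    """Return, for each block of the file, the nodes that hold a replica.

    Simplified round-robin placement for illustration only; it is not
    HDFS's actual (rack-aware) placement algorithm.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    placement = []
    for block in range(num_blocks):
        # Start each block on a different node so storage load spreads evenly;
        # consecutive indices guarantee the replicas land on distinct nodes.
        placement.append([nodes[(block + r) % len(nodes)] for r in range(replication)])
    return placement

nodes = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical cluster
plan = plan_blocks(1000, nodes)                   # a 1,000 MB file
print(len(plan))   # 8 blocks (the last one is only partially filled)
print(plan[0])     # ['node-a', 'node-b', 'node-c']
```

Because every block lives on several machines, reads can be served in parallel and losing one node does not lose any data.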

Layer 2: Data

Now, let’s talk about the data layer. This is all about gathering, storing, and processing the data that your AI models will learn from.

So, data comes from all sorts of places. You have sensors and IoT (Internet of Things) devices collecting data from the physical world, like temperature sensors or smart home devices. There’s also web scraping, where you use tools like BeautifulSoup to pull data from websites. And don’t forget APIs, which let you grab data from platforms like Twitter.
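Here is a minimal scraping sketch. The text mentions BeautifulSoup; to keep the example dependency-free it swaps in Python’s built-in `html.parser` module instead. The HTML snippet and the `price` class name are made up, and in practice you would first download the page while respecting the site’s terms of use and robots.txt.

```python
from html.parser import HTMLParser

class PriceScraper(HTMLParser):
    """Collect the text inside every <span class="price"> element."""

    def __init__(self):
        super().__init__()
        self.in_price = False
        self.prices: list[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) tuples
        if tag == "span" and ("class", "price") in attrs:
            self.in_price = True

    def handle_endtag(self, tag):
        if tag == "span":
            self.in_price = False

    def handle_data(self, data):
        if self.in_price and data.strip():
            self.prices.append(data.strip())

html = """
<ul>
  <li>Laptop <span class="price">$999</span></li>
  <li>Mouse <span class="price">$25</span></li>
</ul>
"""  # hypothetical page content

scraper = PriceScraper()
scraper.feed(html)
print(scraper.prices)  # ['$999', '$25']
```

With BeautifulSoup installed, the same extraction is roughly `[s.get_text(strip=True) for s in BeautifulSoup(html, "html.parser").select("span.price")]`, which is why it is the more common choice for real scrapers.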

Once you’ve collected the data, you need to store it. You can use relational databases like MySQL for structured data and NoSQL databases like MongoDB for semi-structured or unstructured data. For massive amounts of raw data, you can use data lakes, which are like giant storage pools.
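To make the structured versus unstructured distinction concrete, the sketch below stores fixed-schema sensor readings in a relational table and free-form reviews as JSON documents. It uses Python’s built-in `sqlite3` as a stand-in for MySQL and plain JSON serialization as a stand-in for a document store such as MongoDB; the table and field names are hypothetical.

```python
import json
import sqlite3

# Structured data: every row has the same columns, enforced by a schema.
# sqlite3 stands in for MySQL here; the SQL is very similar.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor_id TEXT, temp_c REAL, ts TEXT)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?, ?)",
    [("s1", 21.5, "2024-01-01T10:00"), ("s1", 22.0, "2024-01-01T11:00")],
)
avg_temp = conn.execute(
    "SELECT AVG(temp_c) FROM readings WHERE sensor_id = ?", ("s1",)
).fetchone()[0]
print(avg_temp)  # 21.75

# Semi-structured data: documents whose fields vary from record to record,
# the model that document stores such as MongoDB are built around.
documents = [
    {"user": "u1", "review": "Great product", "tags": ["fast", "cheap"]},
    {"user": "u2", "review": "Okay", "rating": 3},  # different fields, no schema change
]
serialized = [json.dumps(doc) for doc in documents]  # what a document store would persist
restored = [json.loads(s) for s in serialized]
print(restored[1].get("rating"))  # 3
```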

Before you can use the data, it often needs some cleaning and transforming. This is where ETL (Extract, Transform, Load) processes come in. They help you refine the data into a usable format. Tools like Apache NiFi and Talend are really handy for this.
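The ETL steps can be sketched in a few dozen lines. This is a hand-rolled illustration, not how NiFi or Talend work internally (both are largely configured through visual pipelines). The CSV contents and the cleaning rules (drop rows with missing amounts, drop duplicate order IDs, normalize currency codes) are assumptions chosen for the example, and an in-memory SQLite database stands in for the target system.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,amount,currency
1,100.0,USD
2,,USD
3,85.5,usd
3,85.5,usd
"""  # hypothetical export with a missing value, inconsistent casing, and a duplicate

def extract(text: str) -> list[dict]:
    """Extract: read raw rows from the source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: clean, deduplicate, and convert types."""
    seen = set()
    clean = []
    for row in rows:
        if not row["amount"]:          # drop incomplete rows
            continue
        if row["order_id"] in seen:    # drop duplicate orders
            continue
        seen.add(row["order_id"])
        clean.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
    return clean

def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (id INTEGER, amount REAL, currency TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM orders ORDER BY id").fetchall())
# [(1, 100.0, 'USD'), (3, 85.5, 'USD')]
```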

Layer 3: Infrastructure

This layer encompasses the platforms and environments where AI models are developed, trained, and deployed.

Cloud platforms like AWS, Google Cloud, and Microsoft Azure offer scalable compute resources, storage solutions, and AI services. They provide you the flexibility to scale up or down based on your workload requirements.

If you have specific security or compliance needs, you can opt for on-premises infrastructure, using private data centers equipped with the necessary hardware and software.

By combining cloud services and on-premises infrastructure, hybrid solutions offer you the best of both worlds, providing scalability and control.

Layer 4: Modeling

Modeling focuses on constructing and training AI models using machine learning frameworks like TensorFlow and PyTorch. These frameworks simplify development by providing tools and libraries for designing model architectures, configuring algorithms, and setting hyperparameters, and they let developers define complex models and refine them through iterative training.
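To show what refining a model through iterative training means, here is a hand-written training loop that fits a straight line with gradient descent on made-up data. TensorFlow and PyTorch automate this same cycle at scale: they compute the gradients automatically and apply the update step through an optimizer. The data, learning rate, and epoch count are illustrative assumptions.

```python
# Toy data generated from y = 2x + 1 (hypothetical, noise-free)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

# Hyperparameters, chosen by hand for this toy problem
learning_rate = 0.05
epochs = 2000

w, b = 0.0, 0.0  # model parameters, initialized to zero
n = len(xs)
for epoch in range(epochs):
    # Forward pass: predictions and mean squared error (MSE)
    preds = [w * x + b for x in xs]
    errors = [p - y for p, y in zip(preds, ys)]
    loss = sum(e * e for e in errors) / n
    # Backward pass: gradients of the MSE with respect to w and b
    grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
    grad_b = 2 * sum(errors) / n
    # Update step: nudge each parameter against its gradient
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))  # close to 2.0 and 1.0
```

Each pass through the loop is one round of "predict, measure the error, adjust", which is the pattern frameworks scale up to models with millions or billions of parameters.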

Training AI models requires significant computational power, so teams often turn to managed cloud platforms like Google Cloud’s Vertex AI (the successor to AI Platform) or Amazon SageMaker. These platforms provide scalable resources and managed services to handle the intensive computational needs of model training.

Alternatively, some organizations opt for on-premises servers to maintain control over their hardware and data, especially if they have stringent security requirements. Both approaches have their benefits, with cloud platforms offering convenience and scalability, and on-premises solutions providing greater control and potentially lower long-term costs.
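Whether on-premises is cheaper in the long run comes down to simple breakeven arithmetic: the upfront cost divided by the monthly saving compared with the cloud. The sketch below uses hypothetical prices, not quotes from any provider, and ignores factors such as staff time, hardware refresh cycles, and negotiated discounts.

```python
import math

def breakeven_months(onprem_upfront: float, onprem_monthly: float,
                     cloud_monthly: float) -> float:
    """Months after which owning hardware becomes cheaper than renting it.

    Returns math.inf if on-premises never costs less per month than the cloud.
    """
    monthly_saving = cloud_monthly - onprem_monthly
    if monthly_saving <= 0:
        return math.inf
    return onprem_upfront / monthly_saving

# Hypothetical figures for one GPU training server, for illustration only
upfront = 60_000      # purchase price
onprem_run = 1_500    # power, cooling, and maintenance per month
cloud_run = 6_000     # comparable cloud instance per month, running full time

print(breakeven_months(upfront, onprem_run, cloud_run))  # about 13.3 months
```

With these made-up numbers the server pays for itself after roughly 13 months of continuous use. If the workload only runs part of the time, `cloud_run` drops and the breakeven point moves further out, which is one reason bursty workloads often favor the cloud.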

Layer 5: Application

The application layer is about putting AI to work. It’s how you integrate AI models into real-world applications.

Application is where AI models come to life and start making a real impact. Once you’ve got a model trained and ready, the next step is to plug it into your apps or systems. This might mean integrating it into software, setting up APIs for smooth communication, or deploying it as a service. For instance, you could add a recommendation engine to an online store to suggest products based on what users are browsing.
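A recommendation engine can start out very simple. The sketch below implements a basic "viewed together" recommender by counting how often products appear in the same browsing session. The sessions and product names are hypothetical, and production systems typically use richer signals and learned models.

```python
from collections import Counter
from itertools import combinations

# Hypothetical browsing sessions: the products each visitor viewed
sessions = [
    ["laptop", "mouse", "laptop bag"],
    ["laptop", "mouse"],
    ["laptop", "mouse"],
    ["phone", "phone case"],
    ["laptop", "laptop bag"],
]

# For every product, count how often each other product shared a session with it
co_views: dict[str, Counter] = {}
for session in sessions:
    for a, b in combinations(sorted(set(session)), 2):
        co_views.setdefault(a, Counter())[b] += 1
        co_views.setdefault(b, Counter())[a] += 1

def recommend(product: str, k: int = 2) -> list[str]:
    """Return up to k products most often viewed alongside `product`."""
    return [item for item, _ in co_views.get(product, Counter()).most_common(k)]

print(recommend("laptop"))  # ['mouse', 'laptop bag']
```

In an online store, `recommend` would be called with the product a shopper is currently viewing, and the results rendered as "customers also viewed".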

Layer 6: Deployment

Deploying AI models means getting them out of the lab and into the real world.

You need systems to serve your models so they can make real-time predictions. You can also run predictions in batches, like generating daily reports.
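The difference between real-time and batch serving is mostly about when, and how many, predictions you make. In this sketch, `predict` is a hypothetical stand-in for a trained model (a fixed scoring rule), `handle_request` is what an API endpoint would call for each incoming request, and `run_daily_batch` is what a scheduled job would run over many records at once.

```python
from datetime import date

def predict(features: dict) -> float:
    """Hypothetical stand-in for a trained model: a fixed linear scoring rule."""
    return 0.5 * features["visits"] + 2.0 * features["purchases"]

# Real-time (online) serving: score one request as it arrives, e.g. in an API handler
def handle_request(features: dict) -> dict:
    return {"score": predict(features)}

# Batch serving: score many records together on a schedule, e.g. a nightly report job
def run_daily_batch(records: list[dict]) -> list[dict]:
    today = date.today().isoformat()
    return [{"id": r["id"], "score": predict(r), "date": today} for r in records]

print(handle_request({"visits": 10, "purchases": 2}))  # {'score': 9.0}
```

The model is the same in both paths; what changes is latency (real-time needs an answer in milliseconds) versus throughput (batch can take minutes but processes everything at once).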

To make deployment easier, you can use containerization tools like Docker, which package models together with their dependencies. Kubernetes then manages and scales these containers across a cluster.
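Kubernetes’ Horizontal Pod Autoscaler scales a deployment, such as a model-serving service, using the ratio between an observed metric and its target: `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below reproduces that core formula, including its default 0.1 tolerance, but leaves out details of the real controller such as stabilization windows and pod readiness handling. The min/max replica bounds are example values.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:   # close enough to target: don't scale
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))  # respect configured bounds

# 3 model-serving pods averaging 90% CPU against a 60% target
print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
```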

Continuous Integration and Continuous Deployment (CI/CD) are practices that automatically test and deploy code changes. Applied to AI, they help keep models up to date and catch regressions before a new version reaches production.
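For models, a CI/CD pipeline often adds an evaluation gate: a step that compares a newly trained model against the one in production and blocks the release if quality drops. The function below is a hypothetical example of such a gate; the 0.80 accuracy floor and the one-point regression allowance are assumptions you would tune for your own use case.

```python
def should_deploy(candidate_accuracy: float, production_accuracy: float,
                  min_accuracy: float = 0.80, max_regression: float = 0.01) -> bool:
    """Hypothetical CI gate: promote a retrained model only if it clears an
    absolute accuracy floor and is not meaningfully worse than production."""
    if candidate_accuracy < min_accuracy:
        return False
    return candidate_accuracy >= production_accuracy - max_regression

# Values a pipeline step might read from an evaluation report
print(should_deploy(0.91, 0.90))  # True: better than the production model
print(should_deploy(0.88, 0.90))  # False: regressed by more than one point
```

In a real pipeline this check would typically run as a script that exits with a non-zero status on failure, so the CI system stops the deployment.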

Layer 7: Monitoring and maintenance

Once deployed, AI models need ongoing monitoring to make sure they’re working as expected.

You can track how well your models are performing using metrics like accuracy and precision. You also watch for drift, which is when a model’s performance drops because the real-world data it sees has changed from the data it was trained on. Collecting logs helps you understand what’s happening under the hood, and analyzing usage data helps you improve your models.

Protecting data and ensuring privacy is also crucial. You can use encryption to keep data secure and follow regulations like GDPR to make sure you’re compliant.
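The monitoring metrics mentioned here can be computed with a few lines of code. This sketch calculates accuracy and precision for binary predictions and uses the Population Stability Index (PSI), one common drift measure, to compare how inputs were distributed during training versus in production. The bin proportions are hypothetical; a frequently used rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds vary by team.

```python
import math

def accuracy_precision(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    """Accuracy and precision for binary labels (1 = positive class)."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    true_pos = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    pred_pos = sum(p == 1 for p in y_pred)
    precision = true_pos / pred_pos if pred_pos else 0.0
    return correct / len(y_true), precision

def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (proportions).

    PSI = sum over bins of (actual - expected) * ln(actual / expected).
    """
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)   # guard against log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

print(accuracy_precision([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))  # (0.6, 0.6666666666666666)

# Share of inputs falling in each feature bin: training time vs. production (hypothetical)
training_bins = [0.25, 0.25, 0.25, 0.25]
production_bins = [0.10, 0.20, 0.30, 0.40]
score = psi(training_bins, production_bins)
print(round(score, 3))  # 0.228
```

A PSI around 0.23 sits in the "moderate shift" band of that rule of thumb: worth investigating, and possibly a trigger for retraining, before it shows up as a measurable drop in accuracy.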

Layer 8: User interface and integration

The final layer involves the interfaces and integrations that allow users and other systems to interact with AI models.

| Tool | Description | Example Use Case |
|---|---|---|
| APIs | Act like bridges, allowing different software systems to communicate. For AI, APIs enable integration of AI capabilities into applications without building models from scratch. | Use an API to access a pre-trained language model for adding NLP features to an app. |
| SDKs | Provide tools, libraries, and documentation to help integrate AI into applications. They include pre-built functions and example code. | Use an SDK to simplify the integration of AI features into your application. |
| Dashboards | Interactive interfaces that display data and insights generated by AI models, using visualizations like charts, graphs, and maps. | Use a dashboard to visualize sales predictions and identify trends. |
| Libraries | Tools like D3.js, Matplotlib, and Plotly used to build custom visual representations of data and model outputs. | Use libraries to create tailored visualizations of your data and model results. |


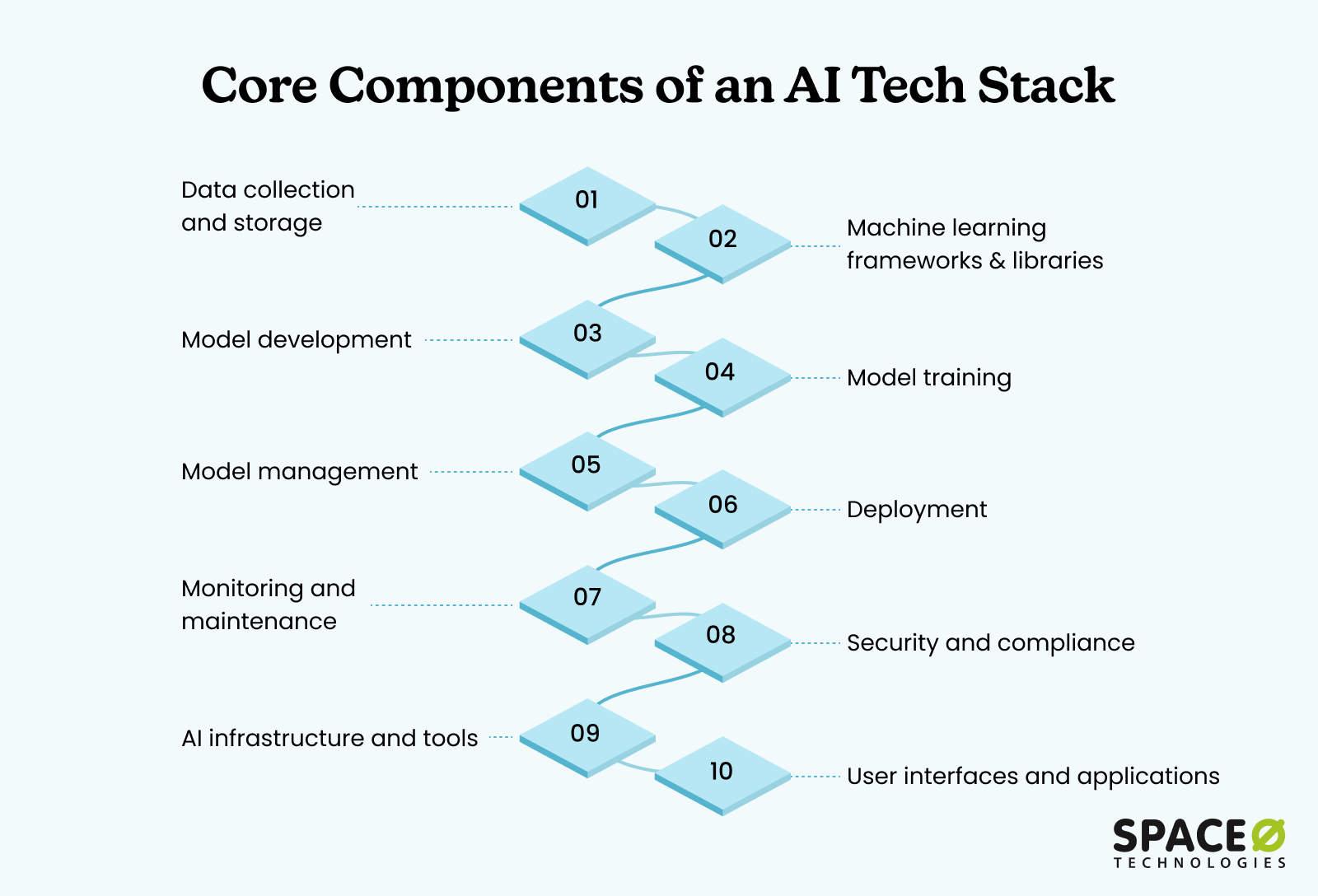

Core Components of an AI Tech Stack

A successful AI technology stack is built on several essential components, each playing a crucial role in the development, deployment, and maintenance of AI solutions for businesses.

Below is a detailed exploration of these components and their significance:

1. Data collection and storage

Purpose:

To gather and store the vast amounts of data required for training AI models. This component ensures that data from various sources is systematically collected, organized, and made accessible for further processing and analysis.

Key elements:

- Sources: Sensors, databases, web scraping, APIs

- Storage solutions: Relational databases, NoSQL databases, data lakes

Importance:

Efficient data collection and storage form the backbone of AI systems, enabling quick access to and retrieval of large datasets. This helps ensure that AI models have the high-quality data they need to learn and make accurate predictions.

2. Machine learning frameworks and libraries

Purpose:

To provide the essential tools and pre-built algorithms for developing AI models. Frameworks offer an integrated environment for AI development, while libraries provide reusable components and functions to support specific tasks within that environment.

Key frameworks:

- TensorFlow & PyTorch: For building and training models

- scikit-learn: For simpler machine learning tasks

Importance:

These frameworks and libraries simplify the complex process of developing AI models, allowing for faster experimentation and optimization. They provide the tools needed to implement advanced algorithms and refine models for better performance.

Fun Fact: According to McKinsey, Amazon’s $775 million acquisition of Kiva reduced the ‘Click to ship’ time from 60-75 minutes to just 15 minutes. This highlights the significant impact of integrating machine learning and automation on operational efficiency and cost savings.

3. Model development

Purpose:

To design and construct AI models tailored to specific tasks or challenges. This involves selecting appropriate algorithms, defining model architectures, and configuring hyperparameters. The goal is to create models that can effectively analyze data and provide accurate predictions or decisions.

Key tasks:

- Algorithm selection: Choosing the right algorithm for the task

- Model architecture design: Structuring the model to optimize performance

- Hyperparameter tuning: Adjusting parameters to improve model accuracy
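Hyperparameter tuning is often done with a grid search: train one model per combination of candidate settings and keep the one that scores best on validation data. The sketch below tunes the learning rate and number of epochs of a tiny gradient-descent model on made-up data. The grid values are assumptions, and libraries such as scikit-learn offer ready-made versions (for example `GridSearchCV`).

```python
from itertools import product

def target(x: float) -> float:
    return 2 * x + 1  # hypothetical ground truth the model should learn

# Train on some points, validate on points the model never sees during training
train_x, valid_x = [0.0, 1.0, 2.0, 3.0, 4.0], [5.0, 6.0]
train_y = [target(x) for x in train_x]
valid_y = [target(x) for x in valid_x]

def fit(lr: float, epochs: int) -> tuple[float, float]:
    """Fit y = w*x + b by gradient descent on mean squared error (training data only)."""
    w = b = 0.0
    n = len(train_x)
    for _ in range(epochs):
        errs = [w * x + b - y for x, y in zip(train_x, train_y)]
        grad_w = 2 * sum(e * x for e, x in zip(errs, train_x)) / n
        grad_b = 2 * sum(errs) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def valid_mse(w: float, b: float) -> float:
    return sum((w * x + b - y) ** 2 for x, y in zip(valid_x, valid_y)) / len(valid_x)

# Every combination in the grid is trained and scored on the validation set
grid = {"lr": [0.001, 0.05, 0.2], "epochs": [50, 300]}
results = {(lr, ep): valid_mse(*fit(lr, ep)) for lr, ep in product(grid["lr"], grid["epochs"])}

best = min(results, key=results.get)
print(best)  # (0.05, 300)
```

On this data the largest learning rate (0.2) makes training diverge, and the smallest (0.001) learns too slowly within the epochs allowed, which is exactly the kind of trade-off tuning is meant to surface.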

Importance:

Model development is crucial as it defines the architecture and behavior of the AI system. A well-designed model is essential for accurately interpreting data and solving specific problems.

4. Model training

Purpose:

To teach AI models to recognize patterns and make decisions based on data. During training, models are exposed to large datasets and learn by adjusting their parameters to minimize errors.

Key considerations:

- Hardware: Utilize GPUs or TPUs for computational efficiency

- Training process: Models learn patterns by minimizing errors through iterative adjustments

Importance:

Proper training, checked against data the model has not seen, helps ensure that the model generalizes well to new data. That ability to perform well on unseen inputs is what makes it effective in real-world applications.
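Generalization is measured by holding out data the model never trains on. The sketch below compares two toy models on hypothetical data: one memorizes the training set, the other learns a single threshold. Both look perfect on the training data, but only the threshold model holds up on the held-out rows. The every-fifth-row split keeps the example reproducible; in practice you would usually shuffle before splitting.

```python
# Hypothetical labelled data: the label is 1 when x >= 50
data = [(x, int(x >= 50)) for x in range(100)]

# Hold out every fifth row as unseen test data
train = [row for i, row in enumerate(data) if i % 5 != 0]
test = [row for i, row in enumerate(data) if i % 5 == 0]

def accuracy(model, rows) -> float:
    return sum(model(x) == y for x, y in rows) / len(rows)

# Model A memorizes the training set and guesses 0 for anything it hasn't seen
lookup = dict(train)
def memorizer(x):
    return lookup.get(x, 0)

# Model B learns one threshold halfway between the two classes in the training data
max_neg = max(x for x, y in train if y == 0)
min_pos = min(x for x, y in train if y == 1)
threshold = (max_neg + min_pos) / 2
def threshold_model(x):
    return int(x >= threshold)

for name, model in [("memorizer", memorizer), ("threshold", threshold_model)]:
    print(name, accuracy(model, train), accuracy(model, test))
# memorizer 1.0 0.5
# threshold 1.0 1.0
```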

5. Model management

Purpose:

To organize, version, and maintain AI models throughout their lifecycle. This component ensures that different models, along with their versions, are systematically tracked and documented.

Key aspects:

- Version control: Managing different iterations of models

- Documentation: Keeping detailed records of model configurations

- Deployment readiness: Ensuring models are ready for production use

Importance:

Effective model management ensures that models are organized and easily accessible, facilitating smooth transitions between development, testing, and deployment phases.
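A model registry is the usual tool for this kind of tracking. The classes below are a minimal, in-memory sketch of the idea, loosely modeled on the stage-based workflow of registries such as MLflow’s; this is not MLflow’s API, and the class names, stages, and fields are hypothetical. Each registered version records its configuration, metrics, a short fingerprint of the configuration, and a lifecycle stage.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class ModelVersion:
    version: int
    params: dict            # configuration / hyperparameters used for training
    metrics: dict           # evaluation results recorded at registration time
    config_hash: str        # short fingerprint of params, handy for spotting duplicates
    created_at: str
    stage: str = "staging"  # lifecycle: "staging" -> "production" -> "archived"

@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; real tools persist this in a database."""
    versions: list[ModelVersion] = field(default_factory=list)

    def register(self, params: dict, metrics: dict) -> ModelVersion:
        canonical = json.dumps(params, sort_keys=True).encode()
        mv = ModelVersion(
            version=len(self.versions) + 1,
            params=params,
            metrics=metrics,
            config_hash=hashlib.sha256(canonical).hexdigest()[:12],
            created_at=datetime.now(timezone.utc).isoformat(),
        )
        self.versions.append(mv)
        return mv

    def promote(self, version: int) -> None:
        """Make one version the production model and archive the previous one."""
        for mv in self.versions:
            if mv.stage == "production":
                mv.stage = "archived"
        self.versions[version - 1].stage = "production"

    def production(self) -> Optional[ModelVersion]:
        return next((mv for mv in self.versions if mv.stage == "production"), None)

registry = ModelRegistry()
registry.register({"lr": 0.05, "epochs": 300}, {"accuracy": 0.91})
registry.register({"lr": 0.01, "epochs": 500}, {"accuracy": 0.93})
registry.promote(2)
print(registry.production().version)  # 2
```

Because older versions are archived rather than deleted, rolling back is just another `promote` call.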

6. Deployment

Purpose:

To make AI models operational by integrating them into production environments where they can be used in real-time applications. This process involves packaging models with their dependencies and deploying them across various environments.

Key tools:

- Containerization: Docker packages models with all necessary dependencies

- Orchestration: Kubernetes manages the deployment and scaling of containerized models

Importance:

A smooth deployment process ensures that AI models are accessible and can scale efficiently, enabling them to deliver value in real-world applications.

7. Monitoring and maintenance

Purpose:

To continuously oversee the performance of AI models and ensure they remain effective over time. This involves tracking key metrics, detecting issues like model drift, and making necessary updates.

Key activities:

- Performance monitoring: Tracking metrics like accuracy and precision

- Model drift detection: Identifying when a model’s performance degrades over time

- Regular updates: Making improvements to maintain model effectiveness

Importance:

Ongoing monitoring and maintenance are essential to keep AI models performing optimally and adapting to changes in data or user needs.

8. Security and compliance

Purpose:

To protect sensitive data and ensure that AI systems operate within legal and regulatory frameworks. This includes implementing robust security measures and adhering to data protection regulations.

Key practices:

- Encryption: Safeguard data both at rest and in transit

- Regulatory compliance: Adhere to standards like GDPR and CCPA

Importance:

Security and compliance are crucial for maintaining trust and ensuring that AI systems are protected from potential threats and operate within legal boundaries.

9. AI infrastructure and tools

Purpose:

To provide the computational resources and development environments necessary for building, training, and deploying AI models. This includes the use of cloud platforms, on-premises servers, and various software tools.

Key components:

- Infrastructure: Cloud platforms like AWS, on-premises servers

- Tools: Jupyter Notebooks, CI/CD pipelines for development and deployment

Importance:

AI infrastructure and tools support the entire AI workflow, ensuring that developers have the resources they need to work efficiently and effectively across all stages of the AI development lifecycle.

10. User interfaces and applications

Purpose:

To enable end-users to interact with AI models and benefit from their insights. This involves creating intuitive interfaces that allow users to easily access and utilize AI-driven features.

Key elements:

- Interfaces: Dashboards, APIs, web and mobile applications

- Integration: Seamlessly incorporate AI into end-user applications

Importance:

Well-designed user interfaces and applications ensure that AI models deliver practical value and are accessible to those who need them, making AI solutions user-friendly and impactful.


8 Best Practices for Building and Managing an AI Technology Stack

A well-structured AI technology stack is essential for the successful development and deployment of AI applications.

Here are some best practices to guide you:

1. Align your tech stack with business objectives

Before you dive into building your AI tech stack, it’s crucial to have a clear vision of what you want to achieve. Start by defining your AI goals and identifying the specific use cases you want to tackle. Once you have that clarity, choose technologies that align directly with your business objectives. Your tech stack should be flexible enough to grow with your business, so keep scalability in mind from the outset.

2. Prioritize data quality and management

In AI, your models are only as good as the data you feed them. That’s why investing in data cleaning, validation, and enrichment is non-negotiable. Establish robust data governance policies to maintain consistency and accuracy across your datasets. Additionally, select data storage and processing tools that can efficiently handle large volumes of data without breaking a sweat.
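Validation rules are one practical way to enforce data quality before training. The sketch below checks rows against simple required-field and range rules and reports every problem it finds. The rule format and the sample records are hypothetical, and dedicated data-validation tools offer far richer checks.

```python
def validate(rows: list[dict], rules: dict) -> list[str]:
    """Check each row against simple rules and return readable problem reports.

    `rules` maps a column name to (required, min_value, max_value); this rule
    format is made up for the example. Use None to skip a bound.
    """
    problems = []
    for i, row in enumerate(rows):
        for col, (required, lo, hi) in rules.items():
            value = row.get(col)
            if value is None:
                if required:
                    problems.append(f"row {i}: missing {col}")
                continue
            if lo is not None and value < lo:
                problems.append(f"row {i}: {col}={value} below {lo}")
            if hi is not None and value > hi:
                problems.append(f"row {i}: {col}={value} above {hi}")
    return problems

rules = {"age": (True, 0, 120), "income": (False, 0, None)}
rows = [{"age": 34, "income": 52000}, {"age": -3}, {"income": 41000}]
for problem in validate(rows, rules):
    print(problem)
# row 1: age=-3 below 0
# row 2: missing age
```

Running a check like this at ingestion time, and failing the pipeline when problems appear, keeps bad records from silently reaching model training.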

3. Optimize compute resources

The hardware you choose plays a big role in the performance of your AI models. For general data processing tasks, CPUs might do the job, but for more intensive tasks like deep learning, GPUs are often the better choice. Cloud computing is a great way to access scalable resources without hefty upfront costs. If your AI tasks are particularly demanding, consider exploring specialized hardware like TPUs for a significant boost in performance.

4. Embrace a modular and flexible architecture

A modular tech stack is like a well-organized toolbox—each component has its place and can be easily swapped out or updated as needed. Choose tools that offer open APIs, so integration with other systems is seamless. Consider adopting a microservices architecture, which breaks your AI system into smaller, independent components. This approach not only improves scalability but also makes maintenance much more manageable.
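Modularity comes down to depending on interfaces rather than concrete implementations. This sketch uses Python’s `typing.Protocol` to define a minimal model interface; the two model classes are hypothetical, and the service works with either one unchanged. In a microservices setup, the same contract would sit behind a network API instead of a Python class.

```python
from typing import Protocol

class Model(Protocol):
    """Any component that can score a list of feature vectors."""
    def predict(self, rows: list[list[float]]) -> list[float]: ...

class MeanBaseline:
    """Hypothetical simple model: predicts the average of each row's features."""
    def predict(self, rows: list[list[float]]) -> list[float]:
        return [sum(r) / len(r) for r in rows]

class WeightedModel:
    """Hypothetical alternative: a fixed weighted sum of the features."""
    def __init__(self, weights: list[float]):
        self.weights = weights

    def predict(self, rows: list[list[float]]) -> list[float]:
        return [sum(w * x for w, x in zip(self.weights, r)) for r in rows]

def scoring_service(model: Model, rows: list[list[float]]) -> list[float]:
    # The service depends only on the Model interface, so either
    # implementation can be swapped in without changing this code.
    return model.predict(rows)

rows = [[1.0, 3.0], [2.0, 4.0]]
print(scoring_service(MeanBaseline(), rows))             # [2.0, 3.0]
print(scoring_service(WeightedModel([1.0, 2.0]), rows))  # [7.0, 10.0]
```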

5. Invest in MLOps

Managing AI models efficiently requires more than just good code; you need solid MLOps practices and tooling. MLOps streamlines model deployment and ongoing management, making the process much smoother. Automating repetitive tasks frees up your team to focus on innovation, and setting up CI/CD pipelines helps ensure that updates and new models move into production smoothly.

6. Monitor and optimize performance

Even after your AI models are up and running, the work doesn’t stop. Continuous monitoring is essential to ensure your models are performing as expected. Be proactive in identifying areas for improvement and retrain models as needed to maintain their accuracy. Tracking key performance indicators (KPIs) will help you measure the impact of your AI initiatives and ensure they’re delivering value to your business.

7. Ensure security and compliance

Protect sensitive data with strong security measures, like encryption and access controls. Make sure your AI operations adhere to industry regulations and compliance standards, such as GDPR or HIPAA. Regularly review and update your security protocols to keep up with evolving threats.

8. Build a strong AI talent team

Your AI tech stack is only as good as the team behind it. Invest in hiring skilled data scientists, machine learning engineers, and MLOps experts. Support continuous learning and development to keep your team at the top of their game. Finally, foster a culture that values innovation and experimentation—this is where the real breakthroughs happen.

Emerging Trends Shaping The Future of AI Tech Stacks

The world of AI is advancing at a breakneck pace, and with it, the technology stacks that power these innovations are also evolving. As we look ahead, several key trends are set to shape the future of AI tech stacks.

Let’s dive into what’s on the horizon:

1. Democratization of AI

One of the most exciting trends is the ongoing democratization of AI. As AI becomes more accessible, we’ll see a broader range of people—beyond just data scientists and engineers—getting involved in AI development.

- Imagine being able to build AI models without writing a single line of code. That’s where low-code and no-code platforms come in. These tools are designed to simplify AI development, making it easier for people with little to no coding experience to create AI-driven solutions. This shift is opening the door for more businesses and individuals to experiment with AI.

- AutoML (Automated Machine Learning) is another game-changer. It automates many of the steps involved in creating machine learning models, from data preprocessing to model selection and tuning. As AutoML tools continue to improve, we can expect faster experimentation, quicker deployment, and ultimately, more innovation in AI.

2. Edge computing and AI

As AI continues to integrate more deeply into our lives, the way we process and analyze data is changing too. Edge computing is at the forefront of this shift.

- Instead of sending all data to a central server, edge computing allows data to be processed closer to its source—whether that’s on a smartphone, a sensor, or another device. This reduces latency, enhances privacy, and allows for faster decision-making. As edge computing becomes more prevalent, we’ll see more AI applications that operate in real-time, without relying on the cloud.

- The IoT is already transforming industries like healthcare, agriculture, and manufacturing. Now, with AI capabilities being integrated directly into IoT devices, these technologies are set to become even more powerful. AI-powered IoT devices can analyze data on the fly, leading to smarter, more responsive systems.
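The basic edge pattern is to process raw readings on the device and send only what the central system needs. In this sketch a simple threshold rule stands in for an on-device model, and the readings and the 80.0 limit are hypothetical; the point is that six raw values are reduced to a small summary plus one alert.

```python
from statistics import mean

def process_on_device(readings: list[float], limit: float) -> dict:
    """Hypothetical edge logic: summarize locally and forward only what matters.

    Instead of uploading every raw reading, the device sends a compact summary
    plus any readings that exceed `limit`.
    """
    alerts = [r for r in readings if r > limit]
    return {"count": len(readings), "mean": round(mean(readings), 2), "alerts": alerts}

# One minute of hypothetical temperature readings from a machine sensor
readings = [70.1, 70.4, 69.8, 70.0, 88.5, 70.2]
payload = process_on_device(readings, limit=80.0)
print(payload)  # {'count': 6, 'mean': 73.17, 'alerts': [88.5]}
```

The alert can be acted on immediately on the device, while the cloud still receives enough information for fleet-wide analysis.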

3. Quantum computing and AI

Quantum computing is one of the most talked-about technologies in the AI space, and for good reason. It has the potential to revolutionize the field by solving problems that are currently beyond the reach of even the most powerful classical computers.

- For certain classes of problems, quantum algorithms could offer dramatic speedups, in some cases exponential ones, over the best known classical methods. This could accelerate AI development, enabling us to tackle complex problems such as drug discovery, climate modeling, and financial forecasting, which require immense computational power.

- Quantum computing isn’t just about speed; it could also lead to the creation of entirely new types of AI algorithms and models. These quantum-powered models might solve problems in ways we can’t even imagine today, opening up new possibilities for AI applications.

4. Data privacy and security

As AI becomes more prevalent, concerns about data privacy and security are growing. Thankfully, new approaches and technologies are emerging to address these challenges.

- Federated learning is an innovative approach that allows AI models to be trained on decentralized data sources, such as smartphones or edge devices, without the data ever leaving the device. This means you can build powerful AI models while keeping personal data private—a win-win for privacy-conscious users and businesses alike.

- As cyber threats become more sophisticated, AI is playing a crucial role in defending against attacks. AI-powered cybersecurity tools can detect and respond to threats in real-time, analyzing vast amounts of data to identify patterns and anomalies that might indicate a breach.
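The core of federated learning is the aggregation step. The sketch below shows the server side of Federated Averaging (FedAvg): each device trains locally and sends back only its model weights and example count, and the server computes an average weighted by data size. The weights and counts are hypothetical, and real deployments usually add safeguards such as secure aggregation, since shared weights can still leak some information.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg aggregation step.

    Each client sends (model_weights, num_local_examples); its raw data never
    leaves the device. Clients with more data get proportionally more influence.
    """
    total = sum(n for _, n in client_updates)
    dims = len(client_updates[0][0])
    return [
        sum(weights[d] * n for weights, n in client_updates) / total
        for d in range(dims)
    ]

# Hypothetical weights trained locally on three phones
updates = [
    ([1.0, 2.0], 100),   # phone A: 100 local examples
    ([3.0, 0.0], 300),   # phone B: 300 local examples
    ([2.0, 2.0], 100),   # phone C: 100 local examples
]
print(federated_average(updates))  # [2.4, 0.8]
```

The server then sends the averaged weights back to the devices as the starting point for the next round of local training.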

5. Specialized hardware acceleration

As AI models become more sophisticated, the need for specialized hardware that can handle these complex workloads is growing.

- Traditional CPUs aren’t always up to the task when it comes to AI. That’s why we’re seeing the continued development of AI-specific chips designed to optimize performance for tasks like deep learning and neural network processing. These chips are becoming more efficient and powerful, enabling faster and more energy-efficient AI computations.

- Inspired by the human brain, neuromorphic computing is an emerging field that aims to create AI systems that are more efficient and capable of handling complex tasks with minimal energy consumption. While still in its early stages, neuromorphic computing holds promise for the future of AI, potentially leading to breakthroughs in areas like robotics and autonomous systems.

How Space-O Technologies Can Be Your AI Co-Pilot

With over 14 years of experience, Space-O Technologies is your go-to partner for transforming your business through artificial intelligence. Our expertise spans automating processes, streamlining workflows, forecasting risks, and maximizing cost efficiency, ensuring you achieve optimal results.

Our skilled AI developers excel in integrating advanced technologies into your solutions. Whether it’s implementing machine learning models, harnessing the power of big data, utilizing GPT (Generative Pre-trained Transformer) for sophisticated language processing, or applying NLP for insightful analytics, we provide 360-degree excellence in AI development.

We’re not just about off-the-shelf solutions; we tailor everything to fit your unique needs. So, if you’re ready to take your business to the next level with a custom AI-driven solution, let’s chat. We’re here to help you navigate the future, one AI-driven innovation at a time. Book your FREE 30-minute AI consultation today.

Frequently Asked Questions on AI Tech Stack

What are the benefits of using cloud platforms for AI?

Cloud platforms provide scalable and flexible computing resources, which are essential for handling the high demands of AI workloads. They allow you to pay for what you use, which can be more cost-effective than investing in on-premises hardware. Cloud platforms also offer various AI services and tools, making it easier to deploy and manage AI applications.

Can I integrate AI into my existing tech stack?

Yes, AI can often be integrated into an existing tech stack, but it may require adding or replacing certain components. For example, you might need to incorporate new data processing tools or machine learning frameworks.

What challenges might I face when building an AI tech stack?

Building an AI tech stack comes with challenges such as selecting the right tools, ensuring component compatibility, managing data quality, addressing scalability, keeping the stack up to date, and finding a skilled team. Space-O Technologies simplifies this process, guiding you throughout the AI journey. Our team stays current with the latest advancements and handles the complexities of your AI stack.
