AI Software Development: A Complete Guide to Developing Custom AI Solutions

After the rise of closed, consumer-grade artificial intelligence (AI) models like GPT-3, businesses have become intrigued by the possibility of custom AI software development. This interest is reflected in the AI engineering sector's projected growth at a 35% CAGR between 2023 and 2032.

The benefits of custom artificial intelligence solutions are well documented: they have been shown to increase production speed and product quality across industries. However, because AI development differs from conventional software development, it requires special care and planning to succeed.

But what kind of special attention do artificial intelligence projects require, and how can companies begin developing custom solutions? In this blog, we outline how development teams around the world can get started with AI development as clearly as possible.

Introduction to AI Software Development

AI software development is the process of building a software solution with AI technology at its core. Such a solution may be anything from a simple chatbot to a commercial-grade classification model used in healthcare to detect pathogens. Creating a custom AI-powered solution is no small undertaking, though: it demands substantial computational resources and weeks of testing, even when you partner with an AI development services provider.

It also involves collecting large volumes of training data and processing it so that it is fit for training the model. And as with regular software development, multiple development methodologies can be used to build an AI solution. Exploring these methodologies gives a general sense of what the AI development process looks like.

Popular AI development methodologies

Different development methodologies are used depending on the scale and deployment needs of the project. While any of them can be used to build the same AI solution, they differ in how they deliver the final product. Some, like Waterfall, have a definitive end, while others are iterative processes that loop back after each cycle.

- Agile: One of the most popular methodologies for rapid development, Agile is built on iterative cycles. These cycles are client-oriented: developers quickly build features and components and deploy them to the core application, delivering progress in short bursts.

- DevOps: More than a process, DevOps is a culture that shapes how the whole team works. In a DevOps cycle, the top priorities are automation and quality testing. With established practices such as continuous integration and continuous delivery (CI/CD), this methodology is well suited to large-scale projects where reliable deployments are critical.

- MLOps: An extension of DevOps, MLOps also prioritizes high-quality deployments. The difference lies in its additional stages for data refinement and for training and evaluating machine learning models. It encourages writing dedicated test cases for ML models and supports experimentation in general.

- Waterfall: Unlike the other methodologies, Waterfall is linear and sequential. The entire application is developed and then moved through testing and deployment phases until the final stage of maintenance. This gives it a definitive end, which suits solutions that benefit from a simple, predictable development process.

- Scrum: A framework within the Agile family, Scrum also delivers individual components and features in quick, focused increments. A Scrum Master facilitates the development cycle, and each cycle is called a sprint, which typically lasts 2-4 weeks. At the start of each sprint, the team chooses which features it will build and deploy.

What makes AI software development different?

Apart from the core product, the main difference between developing an AI project and a conventional software solution lies in the level of refinement required. Conventional software is largely deterministic: the same input produces the same output, so discrepancies in output can usually be traced to a bug.

AI systems, by contrast, are probabilistic. Their outputs depend on patterns learned from training data, and the queries real users send can differ from that data, which affects how effectively the model resolves them. When model drift is observed, that is, when performance degrades because live data has shifted away from the training data, the AI developer must intervene to prevent harm. All of this points to a heightened need for stringent testing, including security testing, and for model refinement throughout the AI development process.
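One common way to detect drift is to compare how a model input is distributed in production against how it was distributed in the training data. A minimal sketch, assuming a single numeric feature and using the Population Stability Index (PSI) with equal-width bins; the feature values are hypothetical, and the 0.2 alert level is a widely used rule of thumb rather than a universal standard:

```python
import math


def population_stability_index(reference, live, bins=10):
    """PSI between a reference sample (e.g. training data) and a live sample.

    Both samples are bucketed into the same equal-width bins; larger values
    mean the live distribution has moved further from the reference.
    """
    lo = min(min(reference), min(live))
    hi = max(max(reference), max(live))
    width = (hi - lo) / bins or 1.0  # guard against all values being equal

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so the maximum value lands in the last bin.
            counts[min(int((v - lo) / width), bins - 1)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares = bin_shares(reference)
    live_shares = bin_shares(live)
    return sum((live_p - ref_p) * math.log(live_p / ref_p)
               for ref_p, live_p in zip(ref_shares, live_shares))


training_values = [i / 100 for i in range(100)]           # hypothetical training feature
production_values = [0.5 + i / 200 for i in range(100)]   # hypothetical shifted live feature
score = population_stability_index(training_values, production_values)
print(f"PSI = {score:.2f}", "-> investigate drift" if score > 0.2 else "-> stable")
```

In practice a check like this would run per feature on a schedule, with alerts feeding into the retraining process rather than triggering it automatically.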

How to Develop Custom AI Software in 7 Steps

To make it easy for any development team to follow, we have laid out a general process for developing any kind of AI solution with any development methodology. The steps are modular and can be reordered or adapted to fit your development cycle. Our guide covers everything from identifying the best AI use case to deploying and maintaining the final AI solution. This framework applies equally well to AI-driven SaaS product development, where scalability, multi-tenancy, and continuous deployment are critical considerations.

Step 1: Value Identification

Before you start your AI development process, identify where AI would add the most value within your operations. For example, a business in the logistics and supply chain industry is likely to benefit from process automation and image classification systems.

The value identification process estimates the approximate cost savings AI would enable and determines which AI model type is best suited to your KPIs. It will also help you understand whether you need a full-fledged AI solution or whether a simpler machine learning solution would suffice.
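A first cost-savings estimate can be simple arithmetic. A minimal sketch, assuming savings come only from labor hours the AI solution automates; the function name and every figure below are hypothetical placeholders, not benchmarks:

```python
def payback_period_months(hours_saved_per_month, hourly_labor_cost,
                          build_cost, monthly_running_cost):
    """Months until cumulative net savings cover the one-off build cost.

    Returns None when the solution never pays for itself, i.e. when running
    costs eat up all of the monthly labor savings.
    """
    net_monthly_savings = hours_saved_per_month * hourly_labor_cost - monthly_running_cost
    if net_monthly_savings <= 0:
        return None
    return build_cost / net_monthly_savings


# Hypothetical: 400 hours/month automated at $30/hour, $60,000 to build,
# $2,000/month for hosting and maintenance.
print(payback_period_months(400, 30, 60_000, 2_000))  # 6.0
```

A real value assessment would add factors this sketch ignores, such as quality gains, revenue impact, and the cost of retraining.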

Various AI consulting firms offer value identification and validation as a standalone service to help businesses figure out the exact benefits of implementing a custom AI solution. The result of a comprehensive value identification process will also make it easier to navigate the initial stages of choosing an AI model for the project.

Step 2: AI Model Selection

The functionality and features of your AI software solution will primarily depend on the type of AI model you build on. Tasks from text generation to image classification each rely on a different kind of model. You may already know popular models such as DALL·E 3 and Claude 2. The most common AI models fall into the following categories:

- Media classification

- Generative AI

- Recommendation systems

- Regression AI

- Anomaly detection

- Natural Language Processing (NLP)

- Reinforcement learning

These types of AI models use different machine learning algorithms, from classical statistical methods to deep neural networks, to deliver results ranging from media generation to target identification. Software engineers typically choose between building on a pre-trained AI model and going the custom AI model development route. Both have their benefits, but for a project of comparable scale, a pre-trained model is usually more affordable because it avoids the cost of training from scratch.

Even among pre-trained models, the emergence of open-source models such as Llama 2 has made large language models accessible and deployable on your own infrastructure. While they might not be as powerful as closed AI models accessed through an API key, they offer stronger data privacy, since all of the data stays within your system.

Step 3: App Architecture Development

Designing your application architecture with resilience in mind is essential for AI projects, because many of the application's functions depend on the core AI model: if the model slows down or fails, those failures can cascade. Loosely coupled components are therefore your best bet for keeping the application modular while optimizing it for observability and fault tolerance.
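One common way to keep the rest of an application working when the model misbehaves is the circuit breaker pattern: after repeated failures, calls to the model are skipped for a cool-down period and a fallback is returned instead. A minimal sketch; the class, its parameters, and the `flaky_model` stand-in are hypothetical, and production systems usually rely on a hardened library or service-mesh feature rather than hand-rolled code:

```python
import time


class CircuitBreaker:
    """Stop calling a failing dependency (such as a model endpoint) for a while.

    After `max_failures` consecutive errors the circuit "opens" and calls
    return `fallback` immediately; after `reset_after` seconds one trial call
    is let through ("half-open").
    """

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock  # injectable for testing
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, fallback=None, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback  # circuit open: don't touch the model at all
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # (re)open the circuit
            return fallback
        self.failures = 0  # a success closes the circuit again
        return result


def flaky_model(prompt):
    """Hypothetical stand-in for a model endpoint that is currently down."""
    raise TimeoutError("model endpoint timed out")


breaker = CircuitBreaker(max_failures=2, reset_after=30.0)
for _ in range(3):
    print(breaker.call(flaky_model, "hello", fallback="Please try again shortly."))
```

The fallback could be a cached answer, a smaller backup model, or a friendly error message; the point is that the failure stays contained in one component.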

Because most generative AI models consume a lot of computing resources, they also raise the risk of your application running short on infrastructure capacity. To counter this, many AI products are deployed on managed or serverless cloud infrastructure. Configuring your architecture with autoscaling resources lets capacity follow demand, improving reliability while keeping AI development costs under control.
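To make autoscaling concrete: the Kubernetes Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). A minimal Python sketch of that calculation, assuming average utilization (in percent) as the metric; the min/max bounds are hypothetical, and the real controller also applies a tolerance band and stabilization windows that this sketch omits:

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20):
    """Scale the replica count by the ratio of observed to target load."""
    desired = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to configured bounds so a spike cannot exhaust the budget.
    return max(min_replicas, min(desired, max_replicas))


# 4 model servers averaging 90% utilization against a 60% target -> 6 servers.
print(desired_replicas(4, 90, 60))  # 6
```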

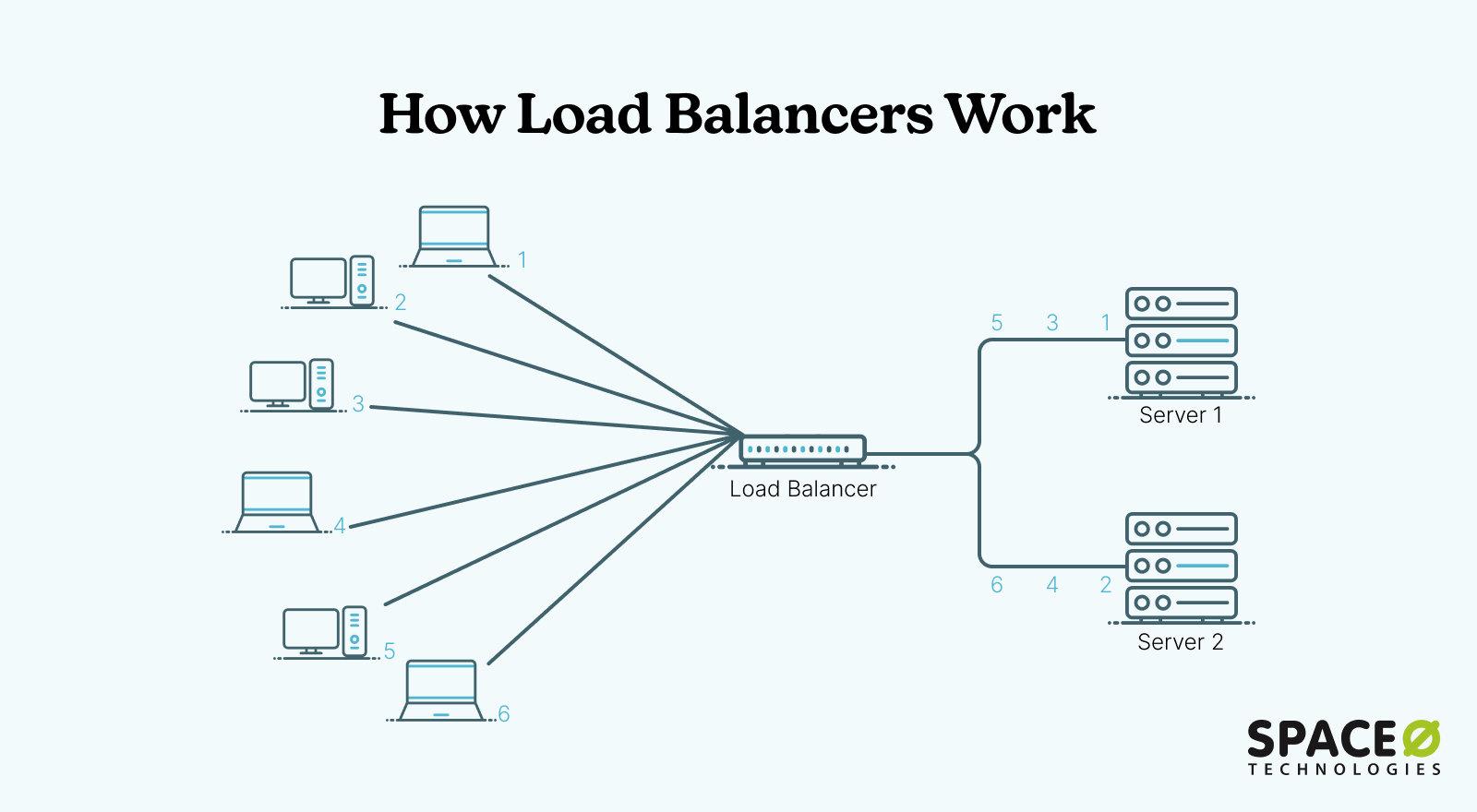

You will also need to plan how your application will handle large volumes of user traffic. For this, you might deploy the AI solution across multiple availability zones or use load balancers to distribute traffic across multiple instances. If it is a locally installed software solution, you may also add local caching to help users access the application faster when it starts up.
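Load balancers support several distribution strategies; round-robin with health checks is one of the simplest. A minimal sketch of that idea, assuming instances are identified by name and marked unhealthy by some external health check; the class and instance names are hypothetical and stand in for what a managed cloud load balancer does for you:

```python
import itertools


class RoundRobinBalancer:
    """Hand requests to instances in turn, skipping any marked unhealthy."""

    def __init__(self, instances):
        self.instances = list(instances)
        self.healthy = set(self.instances)
        self._rotation = itertools.cycle(self.instances)

    def mark_down(self, instance):
        self.healthy.discard(instance)  # e.g. after a failed health check

    def mark_up(self, instance):
        self.healthy.add(instance)

    def next_instance(self):
        # At most one full lap: if nothing healthy is found, give up.
        for _ in range(len(self.instances)):
            candidate = next(self._rotation)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy model instances available")


balancer = RoundRobinBalancer(["gpu-a", "gpu-b", "gpu-c"])  # hypothetical instance names
balancer.mark_down("gpu-b")
print([balancer.next_instance() for _ in range(4)])  # ['gpu-a', 'gpu-c', 'gpu-a', 'gpu-c']
```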

Step 4: Data Processing and Verification

The data processing cycle is fairly simple and begins once data engineers have gathered a large pool of unorganized data. The first stage is data cleaning, where faulty samples are either discarded or fixed with appropriate values. These fixes can range from correcting typos in source files to removing duplicates, all to raise overall data quality.
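A minimal sketch of those cleaning rules, assuming each sample is a dict with hypothetical `text` and `label` fields and that typo corrections come from a hand-maintained lookup table; real pipelines typically use a dataframe library, but the logic is the same:

```python
# Hypothetical typo corrections maintained by the data team.
LABEL_FIXES = {"truk": "truck", "motorcyle": "motorcycle"}


def clean_samples(records):
    """Normalize, repair, and deduplicate raw labeled samples."""
    seen = set()
    cleaned = []
    for record in records:
        text = (record.get("text") or "").strip()
        label = (record.get("label") or "").strip().lower()
        label = LABEL_FIXES.get(label, label)  # fix known typos
        if not text or not label:
            continue  # discard samples that cannot be repaired
        if (text, label) in seen:
            continue  # drop exact duplicates
        seen.add((text, label))
        cleaned.append({"text": text, "label": label})
    return cleaned


raw = [
    {"text": "red sedan", "label": "Car"},
    {"text": "red sedan ", "label": "car"},   # duplicate once whitespace is stripped
    {"text": "", "label": "truck"},           # missing text: discarded
    {"text": "box truck", "label": "truk"},   # typo in label: fixed
    {"text": "dirt bike", "label": None},     # missing label: discarded
]
print(clean_samples(raw))
# [{'text': 'red sedan', 'label': 'car'}, {'text': 'box truck', 'label': 'truck'}]
```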

Next, the data enters the transformation phase, where all samples are converted to a unified file format to ensure compatibility. Categorical labels are also encoded in this phase, since most training algorithms expect numeric input, and the resulting groups keep the data organized. For instance, in label encoding, each group is assigned a numeric value.

For example: Cars = 1, Trucks = 2, and Motorcycles = 3.
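A mapping like this can be produced automatically by giving each new category the next integer. A minimal sketch, numbering from 1 to match the example (scikit-learn's `LabelEncoder`, by contrast, numbers from 0 in sorted order); the function name is hypothetical:

```python
def label_encode(labels, start=1):
    """Map each distinct label to an integer in order of first appearance."""
    mapping = {}
    for label in labels:
        if label not in mapping:
            mapping[label] = start + len(mapping)
    return [mapping[label] for label in labels], mapping


codes, mapping = label_encode(["car", "truck", "motorcycle", "car"])
print(mapping)  # {'car': 1, 'truck': 2, 'motorcycle': 3}
print(codes)    # [1, 2, 3, 1]
```

Because integer codes imply an order that does not exist (a truck is not "more" than a car), one-hot encoding is often preferred for categorical input features fed to linear models or neural networks; label encoding is typically fine for target labels.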

When preparing data, developers sometimes pool all of the samples together and use the entire set to train the model. However, this is far from ideal. Holding out separate test data lets you set accurate benchmarks for the AI model to meet. If the test data is included in the training data, the model has effectively seen the answers in advance, so its test scores will overstate how well it performs on new, unseen data (a problem known as data leakage).
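A minimal sketch of a reproducible hold-out split in plain Python; the function name, the 20% test fraction, and the fixed seed are illustrative choices (scikit-learn's `train_test_split` implements the same idea with more options, such as stratification):

```python
import random


def split_dataset(samples, test_fraction=0.2, seed=42):
    """Shuffle once with a fixed seed, then carve off a held-out test set."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


train, test = split_dataset(range(100))
print(len(train), len(test))  # 80 20
assert not set(train) & set(test)  # no sample appears in both sets
```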

Step 5: UI/UX Development

Although UI/UX development might look simple at face value, it is one of the most important parts of your AI solution, since it determines user engagement and overall satisfaction. Because AI products are so dynamic, their designs need to actively encourage users to experiment and make the most of them.

UI/UX work does not get any easier if you are simply upgrading an existing app, because the new solution will require fresh insights into user behavior. To gather them, you can use regular feedback forms or automated tools, such as Hotjar, that provide user engagement heatmaps.

A/B testing is also crucial when finalizing the UI for your solution, as it allows you to compare multiple wireframes and iterations of your app layout to understand which one works the best. If you are developing an AI-powered web or mobile application, Google Firebase is an excellent tool to use for A/B testing as it has dedicated features to target different demographics on your application.
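Tools such as Firebase report experiment results for you, but it helps to know what "which one works best" means statistically. A minimal sketch of a two-proportion z-test comparing the conversion rates of two layouts; the counts are hypothetical, and the test assumes independent users and reasonably large samples:

```python
import math


def two_proportion_z_test(conversions_a, users_a, conversions_b, users_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conversions_a / users_a
    rate_b = conversions_b / users_b
    pooled = (conversions_a + conversions_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal CDF, written via math.erf.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical: layout A converts 100 of 1,000 users, layout B 150 of 1,000.
z, p = two_proportion_z_test(100, 1_000, 150, 1_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below a threshold chosen before the experiment (0.05 is a common convention) suggests the difference between layouts is unlikely to be chance.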

Step 6: Testing and Benchmarking

The testing and benchmarking stage is one of the most crucial parts of the AI engineering process, as it produces concrete evidence of how your software performs. The standard application testing types still apply to AI solutions and, alongside model-specific evaluation, cover most of what you need to verify that your solution performs as expected. Here is what each of these standard testing types checks:

| Test Type | Evaluation Criteria |
|---|---|
| Unit Testing | Tests individual components or functions of the AI system, such as specific algorithms or data processing functions, to ensure they work as intended in isolation. |
| Regression Testing | Ensures that new changes or updates to the AI system do not negatively impact existing functionalities. It helps confirm that the model’s performance remains consistent over time. |
| Integration Testing | Evaluates how different components of the AI system work together. For instance, testing how the AI model integrates with the application’s user interface or backend systems. |
| Functional Testing | Verifies that the AI system performs the functions it is supposed to, according to the requirements. This includes checking whether the model provides accurate predictions or classifications. |
| User Acceptance Testing (UAT) | Involves real users testing the AI system to ensure it meets their needs and expectations. This is crucial for validating the usability and effectiveness of the AI solution in real-world scenarios. |
| Stress Testing | Assesses the AI system’s performance under high load or stress conditions, such as handling large volumes of data or simultaneous user requests. This helps identify potential scalability issues. |

Once these tests are complete, benchmarking begins: output quality and overall model performance are measured and documented. Based on the benchmarks, AI software development companies can tune the model to use memory more efficiently or respond faster on the existing infrastructure.
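A basic benchmarking harness might record accuracy alongside latency percentiles for any prediction function. A sketch under the assumption that the model is exposed as a plain Python callable and that labeled benchmark samples are available; the function name and the toy model are hypothetical:

```python
import math
import statistics
import time


def benchmark(predict, samples, labels):
    """Measure accuracy and per-request latency (ms) of a prediction function."""
    latencies_ms = []
    correct = 0
    for sample, label in zip(samples, labels):
        start = time.perf_counter()
        prediction = predict(sample)
        latencies_ms.append((time.perf_counter() - start) * 1000)
        correct += prediction == label
    latencies_ms.sort()
    # Nearest-rank 95th percentile: the smallest latency that at least
    # 95% of requests did not exceed.
    p95_index = max(0, math.ceil(0.95 * len(latencies_ms)) - 1)
    return {
        "accuracy": correct / len(labels),
        "median_ms": statistics.median(latencies_ms),
        "p95_ms": latencies_ms[p95_index],
    }


def toy_model(x):
    """Hypothetical stand-in model: predicts 'positive' for values above zero."""
    return x > 0


print(benchmark(toy_model, [3, -1, 2, -5], [True, False, True, True]))
```

Recording results from the same benchmark set after every change makes it easy to see whether an optimization traded away accuracy for speed.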

Step 7: Deployment and Maintenance

Now comes the last stage, where the finished AI product is deployed to production. As mentioned before, it is best to deploy the final application on autoscaling cloud infrastructure, which improves recovery and distributes traffic during periods of excessive demand. But the journey of an AI solution does not end at deployment.

Once deployed, the AI model has to keep up with changing expectations, which means it will need periodic fine-tuning. This ensures it learns from the latest relevant datasets and guidelines and continues to do its job well.
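Deciding when to fine-tune is easier with a simple signal from production. A minimal sketch of a rolling-accuracy monitor, assuming ground-truth outcomes eventually arrive for some predictions (for example, from user corrections); the class name, window size, and accuracy floor are hypothetical:

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent predictions with known outcomes."""

    def __init__(self, window=500, min_accuracy=0.9):
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_retraining(self):
        # Only judge once the window is full, to avoid alarms on tiny samples.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.min_accuracy


monitor = RollingAccuracyMonitor(window=4, min_accuracy=0.75)  # tiny window for illustration
for prediction, actual in [("cat", "cat"), ("dog", "dog"), ("cat", "dog"), ("dog", "cat")]:
    monitor.record(prediction, actual)
print(monitor.accuracy(), monitor.needs_retraining())  # 0.5 True
```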

Additional functionality and features can also be added after deployment, as long as the model is engineered to be flexible. Its decision thresholds can also be adjusted, or its weights updated through retraining, to meet customers' changing sensitivity requirements.

Key Considerations Within AI Software Development

Now that you have learned how to build your own AI software solution, it is time to look at a few factors to keep in mind before you embark on this journey. These factors sit outside the core model development process, but they still deserve close attention because they can significantly affect your project's performance. An AI consultant can help guide you through these complexities for a smoother development process.

Cost

The cost of an AI software development cycle varies widely because it depends on many variables, such as dataset size, model complexity, and the solution's industry. Still, project type gives a rough indication of what your AI project might cost. The table below shows how costs rise by project type.

| Project Type | Development Time | Average Cost |
|---|---|---|
| Minimum Viable Product (MVP) | 2 weeks – 6 months | $10,000 – $150,000 |
| Proof of Concept (PoC) | 2–10 weeks | $10,000 – $100,000 |
| Full-Scale Implementation | 2–12 months | $25,000 – $300,000 |
| Fine-Tuning | 1–4 weeks | $5,000 – $40,000 |
| Consulting | 2–10 weeks | $20,000 – $80,000 |

Developing an MVP first lets you scale costs with your project's growth and avoid a large initial investment. Where your development team is based also strongly affects talent costs; regions such as South Asia offer high-quality AI development services at much lower rates.

Progressive fine-tuning

As with any other software solution, AI models have to be updated over time to keep up with new trends and expectations. In most cases, the model itself remains capable; the limiting factor is its training data. To remedy this, fine-tuning is used to train the existing model on newer datasets.

Fine-tuning can also be carried out at any time to improve your AI model's accuracy or even adapt it to new functions. Methods such as transfer learning are used for this: the model keeps the general patterns it learned during its original training and reuses them for the new task, so only part of it needs to be retrained. This works best when the new task is closely related to the original one. For instance, a surveillance computer vision model can be retrained to specifically detect wild animals.
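The feature-extraction form of transfer learning freezes the pre-trained part of a model and trains only a new output layer (the "head") for the new task. A toy sketch in plain Python, where a fixed function stands in for a hypothetical pre-trained backbone and the head is a logistic regression fitted with stochastic gradient descent; real projects would do this in a deep learning framework such as PyTorch or TensorFlow:

```python
import math
import random


def frozen_backbone(x):
    """Hypothetical stand-in for a pre-trained feature extractor.

    Its "weights" are fixed: it is never updated during fine-tuning.
    """
    return [x[0], x[1], x[0] * x[1]]


def train_new_head(samples, labels, epochs=200, lr=0.1, seed=0):
    """Fit only a new logistic-regression head on the frozen features."""
    rng = random.Random(seed)
    features = [frozen_backbone(x) for x in samples]  # computed once, never trained
    weights = [0.0] * len(features[0])
    bias = 0.0
    data = list(zip(features, labels))
    for _ in range(epochs):
        rng.shuffle(data)
        for f, y in data:
            z = sum(w * fi for w, fi in zip(weights, f)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            err = p - y  # gradient of the log-loss with respect to z
            weights = [w - lr * err * fi for w, fi in zip(weights, f)]
            bias -= lr * err
    return weights, bias


def predict(weights, bias, x):
    z = sum(w * fi for w, fi in zip(weights, frozen_backbone(x))) + bias
    return int(z > 0)


# Hypothetical new task: label 1 when both inputs share the same sign.
samples = [(1, 1), (-1, -1), (1, -1), (-1, 1)]
labels = [1, 1, 0, 0]
weights, bias = train_new_head(samples, labels)
print([predict(weights, bias, x) for x in samples])  # [1, 1, 0, 0]
```

Training only the head needs far less data and compute than training the whole model, which is why this approach suits closely related tasks.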

Retraining the model on more data usually works. In more drastic cases, developers can also modify the model's weights, or adjust the decision thresholds applied to its outputs, to make the solution behave as intended. For example, lowering the score threshold at which a natural language processing model flags an input as inappropriate makes it less tolerant of such inputs, which helps protect users.
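The effect of such a threshold change can be checked on a labeled sample before rollout. A minimal sketch, assuming a classifier that outputs an "inappropriate" score between 0 and 1; the function names, scores, and labels below are hypothetical:

```python
def flag(scores, threshold):
    """Flag every input whose 'inappropriate' score meets the threshold."""
    return [score >= threshold for score in scores]


def recall(flags, labels):
    """Share of truly inappropriate inputs (label 1) that were flagged."""
    positives = [f for f, y in zip(flags, labels) if y == 1]
    return sum(positives) / len(positives)


scores = [0.95, 0.72, 0.55, 0.40, 0.20, 0.05]  # hypothetical classifier outputs
labels = [1, 1, 1, 0, 0, 0]                      # hypothetical ground truth
for threshold in (0.8, 0.5):
    flags = flag(scores, threshold)
    print(f"threshold={threshold}: flagged={sum(flags)}, recall={recall(flags, labels):.2f}")
# threshold=0.8: flagged=1, recall=0.33
# threshold=0.5: flagged=3, recall=1.00
```

Lowering the threshold catches more truly inappropriate inputs at the cost of flagging more harmless ones, so the right value depends on how costly each kind of mistake is.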

Integration complexities

When you plan to integrate AI into an existing application for a new feature, it is good practice to expect complications. The likelihood of complications only rises if you are working with legacy applications running on on-premises infrastructure.

In such scenarios, a broader digital transformation may be required to overcome the limitations of local infrastructure and integrate the new AI solution cohesively. Such an infrastructure transition can cause some downtime for your existing solutions while data is migrated.

AI/ML Hallucinations

Almost all artificial intelligence and machine learning models are prone to hallucinations. During an AI/ML hallucination, the model generates incorrect results or misclassifies details. A common cause is a lack of relevant, representative training data, which leaves the model unable to handle the inputs it actually receives.

This is why it is so important to spend time on data preprocessing so that you train your model only on a large amount of high-quality data. Moreover, training or configuring the model to decline requests that fall outside its capabilities can help avoid hallucinations, which could otherwise harm the end user.
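For classifiers, one simple way to decline is confidence-based abstention: return no answer when the model's top probability falls below a cut-off. A minimal sketch; the function name and the 0.7 cut-off are hypothetical, and model probabilities are often poorly calibrated, so the cut-off should be tuned on held-out data:

```python
def predict_or_abstain(class_probabilities, min_confidence=0.7):
    """Return the most likely label, or None to decline when confidence is low."""
    label, confidence = max(class_probabilities.items(), key=lambda item: item[1])
    return label if confidence >= min_confidence else None


print(predict_or_abstain({"invoice": 0.92, "receipt": 0.08}))  # invoice
print(predict_or_abstain({"invoice": 0.55, "receipt": 0.45}))  # None
```

A `None` result can route the request to a human reviewer or a "cannot answer" message instead of a confident-sounding guess.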

Compliance standards

One of the primary considerations when building any software solution is compliance with data protection regulations in the jurisdictions where it is deployed. This is especially true for AI software solutions, as they often harvest training data from public sources. Given the legal uncertainty around using copyrighted media to train AI models, it is best to have all of your licenses and certifications in order to avoid future liabilities.

In some industries, lacking the relevant certifications can mean the product is never deployed for public or industrial use. This is the case for most healthcare-oriented software solutions, which must follow a range of compliance standards, such as HIPAA in the United States and ISO/IEC 27001, before medical institutions can safely use them.

FAQ

How long does it take to develop a complete AI solution?

With a team of five developers, a full-scale implementation of an AI solution typically takes anywhere from 2 to 12 months, from initial ideation to final user-ready deployment. Timelines vary from project to project depending on scale, integration requirements, and the type of model used, because each of these factors influences how extensive every stage, such as data processing, will be.

Which programming languages are used for AI development?

Programming languages commonly used for AI development include:

- Python, for its extensive libraries (such as TensorFlow, PyTorch, and scikit-learn) and ease of use

- R, for statistical analysis and data visualization

- Java, for large-scale systems and enterprise applications

- C++, for its performance efficiency in high-speed applications

- Julia, for high-performance numerical and scientific computing

- Scala, used with libraries such as Breeze for numerical computing and Apache Spark MLlib for scalable machine learning

Each language offers unique features and libraries suited to different aspects of AI development.

Are there any ways to reduce the cost of AI development?

Yes. There are several ways to reduce the cost of building a custom AI solution, such as using pre-trained models or outsourcing the project to a trusted development partner. Many AI projects already build on pre-trained foundation models such as GPT-4 or Stable Diffusion 3, which avoids the cost of training from scratch. Partnering with an AI development company also takes much of the effort and additional cost off your plate, since the partner handles the entire process with its expertise.

Conclusion

In this blog, you have learned the steps and nuances involved in developing an AI solution, along with the additional factors to consider before building a custom AI product. With this knowledge, you can make informed business decisions about AI with confidence.

But if AI software development seems too complex, you can rely on a trusted AI development partner such as Space-O. With over 14 years of experience, Space-O provides comprehensive AI-powered solutions across major industries, including healthcare, finance, and eCommerce.
